Commentators lauding and panning iOS6 maps are focussing on the core maps experience—for good reason—but it’s the Siri integration that’s worth paying most attention to.

The core maps scale with money and time – both of which Apple has ample amounts of. More planes will fly, more servers will crunch data, updates will be deployed faster to the web service. Nothing a billion dollars and a couple of years can’t fix.

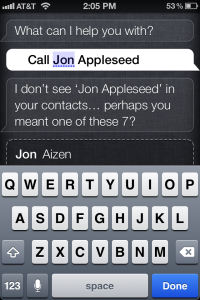

Siri as your Navigator

It’s the Siri experience that I’m most psyched about. It is, after all, something I predicted in November 2011 – in a post aptly titled ‘Siri-based navigation is coming soon’. It only took a year!

It’s a verbose post that maps out why Siri should be your navigator, starting from a simple premise:

Typical car travel is a two-person activity – one to steer, and another to navigate. Sure, you can make do with just one person fumbling with a GPS device while trying to drive. But imagine a future where Siri becomes that navigator – skillful, omniscient, helpful and entirely hands-off.

The future is, of course, here. It’s a testament to Apple’s genius that when the future arrives, it hits you with a ‘well, duh’ realization.

My personal experience with Siri as Navigator

This morning, when I left home, I asked Siri: “Take me to work”. And she did. I did not look at the iPhone even once (well OK, I did, but not to peek at the directions), and Siri even re-routed me when I hit traffic. I also asked her “Are there any gas stations near the route?” and she found some.

Funnily enough, when providing directions to the next gas station, one of the options she offered was “Find the next one”. She understands you might just have missed the exit to the first one. That’s smart!

Then I asked her “Is there a Starbucks near my destination?”. Siri couldn’t answer this – but it wasn’t so bad! She said “Sorry, I can’t find places near a business”. In other words, she understood what I was asking, but didn’t know how to answer just yet. Of course, this will change in the future.

And this is where the data comes in

When Apple says they’ll get better with usage – don’t be fooled. The usage won’t actually improve the visual map experience that much – they probably already knew that the Brooklyn Bridge doesn’t look so good. And they’ve already had the traffic data pumping in from the previous incarnation for years.

No, this is about Siri. As Apple gets real-world usage of a completely voice-driven application (which it needs to be, and practically begs to be), they’re developing the deepest, most comprehensive understanding of how travel gets done, not just how maps look and feel.

So this is classic Apple – focus on learning how to make things better, not just copying what already exists.

Roadmap for Siri

Siri, as navigator, of course needs a roadmap. Based on my previous blog post, here’s a suggested roadmap for Siri actions. My humble suggestions for the product manager for this feature at Apple (or for the corresponding person at Google + Google Now, natch!)

- [done] Spoken directions with Siri’s voice – “Next turn in 300 feet”

- [done] Routing and rerouting commands, using Contacts information – “Take me home”

- [done] Gas nearby – “Are there any gas stations near the route”

- [untested] Adding gas stations as waypoints – “Siri: Added Shell gas station as waypoint”

- Send message about current route to someone – “Let Abha know when I’ll reach home”

- Notice unexpected slowdown and proactively suggest rerouting – “Siri: We seem to be stuck in traffic, but I have an alternate routing suggestion for you”

- Find parking spot at destination – “What are the parking options at the destination?”

- Nirvana – “Siri, let’s go home, but stop by Pete’s laundry and the Safeway near our home on the way”

Now, if only Siri could understand my accent well…

Amit